Interview: Peter Vogel

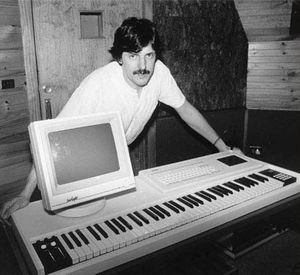

Left: Peter Vogel with the Fairlight Series III CMI

Right: Peter Vogel with daughter

Courtesy Peter Vogel

Together with Kim Ryrie, Peter Vogel was responsible for the design and development of the hugely influential Fairlight Computer Musical Instrument (CMI), which from 1979 brought the sound of digital sampling and sequencing to popular music audiences thanks to its use by artists such as Kate Bush, Peter Gabriel, Stevie Wonder, Herbie Hancock, Duran Duran and Jean-Michel Jarre.

Peter Vogel interviewed by James Gardner, 8 May 2010. Updated and corrected by Peter Vogel and James Gardner, May 2012.

James Gardner: Why, 30 years after its debut, have you revived the Fairlight CMI[1]?

Peter Vogel: Since the invention of the internet, people have been able to find me quite easily. So every few days for the last ten or fifteen years I would receive an email from somebody somewhere in the world. I’d get something from Brazil from someone saying “I found you on the internet and I just want to tell you about what the Fairlight has meant to my musical career, and thank you for inventing it” and so on. This gathered a head of steam during 2009-10 and I thought that there must be a market for a vintage replica of the original Fairlight. Because there’s no way, really, of getting the same sound. There have been attempts to sample the sounds of a Fairlight into a contemporary sampler, but that doesn't really have the same effect.

Why is that?

You can obviously record and reproduce one note, but if you then try and do anything with it—like change its pitch or something—you won’t get the same characteristics as you did from the original instrument because—I guess like any other acoustic musical instrument—the Fairlight imposed all sorts of distortions and frequency colourations and so on into the sound as you were playing different notes and there’s a sort of dynamic effect that goes on. For example, the last Fairlight before this revival was only a 16-channel device and so if you were playing music with any sort of decay time you’d rapidly use up the 16 channels and so that would be allocating new channels, truncating old sounds and so on. So that had a particular effect. And because it used 16 physically distinct pieces of hardware to generate the 16 voices, each one of them would sound a bit different because of the analogue circuitry being different. And in those days it could be significantly different from one channel to another. So as it swapped and changed between the channels it would be quite a different sound quality coming out. So if you just take a representative sample and feed it into a device which substantially exactly reproduces what you put in, you get what I guess people describe as a ‘sterile’ version of the same thing.
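As a rough illustration of the channel juggling described above, here is a minimal Python sketch of a fixed pool of 16 voices. Each note holds its channel until its decay finishes, and when every channel is busy the oldest note is truncated so its channel can be reused. The "steal the oldest" policy, the class name `VoiceAllocator` and the ±5% per-channel gain spread are all illustrative assumptions, not the CMI's documented behaviour.

```python
import random
from typing import List, Optional


class VoiceAllocator:
    """Hypothetical model of a fixed pool of hardware voices.

    Each note occupies a channel until its decay has finished. When every
    channel is busy, the oldest note is truncated and its channel reused
    ("voice stealing"). This is an illustrative policy, not the CMI's own.
    """

    def __init__(self, num_channels: int = 16, mismatch: float = 0.05, seed: int = 0):
        rng = random.Random(seed)
        # Fixed per-channel gain error, standing in for component tolerances
        # in physically separate analogue circuits (assumed +/-5% here).
        self.gains = [1.0 + rng.uniform(-mismatch, mismatch) for _ in range(num_channels)]
        self.notes: List[Optional[int]] = [None] * num_channels
        self.starts = [0.0] * num_channels  # when each channel's note began
        self.ends = [0.0] * num_channels    # when each channel's decay finishes

    def note_on(self, note: int, now: float, decay: float) -> int:
        """Start `note` at time `now`, sounding for `decay`; return its channel."""
        free = [ch for ch, end in enumerate(self.ends) if end <= now]
        if free:
            ch = free[0]
        else:
            # Every channel is still decaying: steal the oldest one.
            ch = min(range(len(self.starts)), key=lambda c: self.starts[c])
        self.notes[ch] = note
        self.starts[ch] = now
        self.ends[ch] = now + decay
        return ch


alloc = VoiceAllocator()
# Seventeen long-decaying notes: the 17th has to cut off the note on channel 0.
channels = [alloc.note_on(60 + i, now=i, decay=100) for i in range(17)]
```

Because the same note can land on a different channel each time, and each channel carries its own gain (`alloc.gains[ch]`), repeated notes come out slightly different, which is the kind of variation a single clean sample played back in a modern sampler lacks.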

So it doesn’t have the animation and the movement between the different voices?

Yes, and also as you changed the pitch of the note all sorts of different effects would come in and out. So just playing across the keyboard of the Fairlight, the sound quality varied quite dramatically from one end of the keyboard to the other. Which is an interesting effect as a musical instrument and of course all musical instruments are like that, which is what gives them their character. As opposed to taking one note into a sampler and just transposing the pitch, in which case you lose all that interesting colouration.

So the old Fairlight’s electronic quirks affected its sound just as the Mellotron’s mechanical and electrical idiosyncrasies gave it ‘character’, depending on the condition of the tape, the state of the playback heads and so on.

Yes. So what we’ve done with the new Fairlight is to model all the bits of hardware that were in the old one. When we started off with the Fairlight we didn’t have the luxury of buying a PC that did what we wanted. In fact you couldn’t buy a PC at all—it was a decade before the first PCs came out. So we had to design hardware that would do what we wanted. And in those days the state of the art was a 1MHz processor as opposed to these days where 3GHz is standard. So we’re dealing with a processor that was 1/3000th the speed of what’s modern. Not to mention that we were dealing with 8 bits instead of the 16 or 24 bits that are common these days.

So in order to make those processors do something that they couldn’t possibly do, things that were 1000 times outside their capability, we had to add a lot of hardware around them to make up for the deficiencies. And that hardware in those days was quite cutting-edge and we were using filters and ADCs [analogue-to-digital convertors] and so on. And at that time a 16-bit DAC [digital-to-analogue convertor] or ADC was very high tech. Getting it to sample at even 44.1kHz was a big ask and a lot of money. And the variation from one such DAC to another was potentially quite significant. So we ended up with a whole string of envelope generators, DACs, VCAs [voltage-controlled amplifiers], mixers, all that sort of thing in analogue circuitry to augment the digital sampling side of it. So what we’ve done with the new Fairlight is that we’ve modelled all those bits of hardware as well as we could, with all their imperfections and quirks, and we’ve programmed that into an FPGA[2], and therefore the configuration is very close to the original Fairlight except that instead of having a whole circuit board full of electronics to do a channel, we have a small fraction of one chip doing the same thing.

And that means that as well as emulating the original CMI it can also be configured to perform as a modern high-specification sampler.

That’s right. What we’re starting off with is an audio processor which is at the highest end of audio fidelity that’s possible today. And in fact because we’re using the FPGA, we’re not constrained to any idea of word widths, so the idea of 16-bit, 24-bit and so on doesn’t matter in an FPGA because everything’s inherently one bit strung together as far as you want to go.

So having designed all these modules that emulate the original CMI components, we can control how much of that effect is included in the sound. The first CMI was an 8-bit device with a typical sampling rate of about 8kHz, and then we went to a 12-bit device, then we went to 16-bit in the Series III. So effectively we now have a ‘Goodness’ control in there, so we can set it to sound like a Series I or a Series II or a Series III. But the good thing about this knob is that, like on a good amplifier, it can go beyond 10, to 11. And if you turn it up to 11 you get something that sounds more Fairlight than a Fairlight! And because it’s programmable, you can just keep going up as much as you want. You can completely exaggerate the acoustic defects or whatever aspects of it you liked. So it can do all the Fairlight things. It can be more Fairlight than Fairlight or, at the other extreme, it can be more pristine than the best sampler on the market today.
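Two of the imperfections mentioned here, a small word width and a low sampling rate, are easy to picture in code. The Python sketch below is a minimal, hypothetical stand-in for such a "Goodness" control: it only reduces bit depth and crudely lowers the effective sample rate by sample-and-hold. The function names and parameters are assumptions for illustration; the CMI-30A's actual FPGA models also cover filters, DAC mismatch, envelopes and more.

```python
import math
from typing import List


def quantize(samples: List[float], bits: int) -> List[float]:
    """Round samples in [-1.0, 1.0] to a signed `bits`-bit grid (fewer bits = coarser)."""
    levels = 2 ** (bits - 1)  # e.g. 128 steps either side of zero for 8 bits
    out = []
    for x in samples:
        q = round(x * levels)                 # nearest integer code
        q = max(-levels, min(levels - 1, q))  # clip to the signed range
        out.append(q / levels)
    return out


def sample_and_hold(samples: List[float], source_rate: int, target_rate: int) -> List[float]:
    """Crudely lower the effective sample rate by repeating every kept sample."""
    hold = max(1, round(source_rate / target_rate))
    return [samples[i - i % hold] for i in range(len(samples))]


def degrade(samples: List[float], bits: int, source_rate: int, target_rate: int) -> List[float]:
    """Apply both imperfections; lower `bits` or `target_rate` exaggerate them."""
    return quantize(sample_and_hold(samples, source_rate, target_rate), bits)


sr = 48000
tone = [math.sin(2 * math.pi * 440 * n / sr) for n in range(480)]
early_style = degrade(tone, bits=8, source_rate=sr, target_rate=8000)  # 8-bit, ~8kHz
beyond_11 = degrade(tone, bits=4, source_rate=sr, target_rate=4000)    # past any real model
```

Pushing `bits` and `target_rate` below anything a real Series used is the code equivalent of turning the knob past 10. Note that this sketch has no anti-aliasing or reconstruction filtering, so it is harsher than real hardware with its analogue filters would be.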

What about the sequencing side of things? Does it have something like Page R?

Page R is something that often comes up when we’ve been speaking to previous Fairlight users. They say it was one of the things that really made the instrument what it was—apart from the way it butchered the sound! The Page R sequencer gave birth to a whole musical genre because of the way it worked. It made particular types of composition easy and therefore that’s what people did instinctively. So the sorts of rhythmic structures that were built using Page R had their own character.

Originally the Fairlight had no composing capability at all. It was built as a keyboard instrument with no sequencing capability. It was really just my own selfish idea: because I couldn’t play a keyboard and I wanted to make music from this thing, I came up with this programming language called MCL [Music Composition Language] which was really just meant to be a bit of a plaything, but of course once the guys at the Canberra School of Music and the Conservatorium got hold of it, they wanted to do stuff with it, and wanted to be able to do more things than it could do, and it was very easy to just keep adding more commands to it. Some quite interesting music was written using that.

MCL was strictly a text-based sequencing language and it was very much a computer programming language because I designed it coming from a computer programming background. So it was very simple and very compact and you could write just a few lines of code and start spewing out very complicated music. But it was hard to get your head around and it wasn’t at all visual or intuitive to use.

And then on to the scene came Michael Carlos, who was one of our first software engineers. Now he started life as a musician—he was in the band Tully at the time that we met him, and he was one of the first people in Australia to have a modular Moog synthesizer, and quite well-known as being on the cutting edge of electronic music. And he just became completely obsessed with the Fairlight when he got his hands on one—fell in love with it instantly—and before we knew it he was hacking into the code and changing things and making improvements, having had no experience as a computer programmer. But he certainly had a brain for it and figured it out very quickly and before long was writing code and to this day he’s a software engineer at Fairlight—and his musical career took a steep dive thereafter! But he came up with the idea of Page R and he just thought as a musician, “this is the way I want to work—I just want to be able to plonk things down on the screen and hear them immediately”. And we said “yeah, great idea, Michael—you do it”. And a few weeks later he came back and showed us: “here it is” and it just grew from there.

Did he introduce the idea of quantizing as well?

Yes. The reason we had to introduce quantizing was that we were dealing with a 1MHz processor, and you’d sit down and play something on the keyboard and it would be many milliseconds before the sound actually came out. Necessity was the mother of invention there in that without quantizing, it was useless. So you had to quantize it to bring everything back into time.
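In its simplest form, quantizing means snapping each played note's start time to the nearest point on a rhythmic grid. The Python sketch below shows that idea under stated assumptions: the function name, the optional constant `latency_ms` correction and the round-to-nearest rule are illustrative choices, not a description of how the Fairlight's own quantize function worked.

```python
import math
from typing import List


def quantize_onsets(onsets_ms: List[float], grid_ms: float, latency_ms: float = 0.0) -> List[float]:
    """Snap note-onset times to the nearest multiple of `grid_ms`.

    `latency_ms` is an assumed constant processing delay that is removed
    before snapping; a real system's delay would not be this uniform.
    """
    out = []
    for t in onsets_ms:
        t -= latency_ms
        step = math.floor(t / grid_ms + 0.5)  # nearest grid step, ties round up
        out.append(step * grid_ms)
    return out


# At 120 bpm a sixteenth note lasts 60000 / 120 / 4 = 125 ms.
played = [130, 262, 371, 509]  # sloppy, late onsets in milliseconds
in_time = quantize_onsets(played, grid_ms=125, latency_ms=10)
```

Here the late, uneven onsets land exactly on successive sixteenth-note positions (125, 250, 375 and 500 ms), which is the "bringing everything back into time" effect described above.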

When you and Kim Ryrie started out on the project that became the Fairlight CMI, your intention was to make a digital synthesizer that would produce sounds as rich and as complex as acoustic instruments, wasn’t it?

Yes, though we didn’t really know where we were going with it. I’d been playing with computers for years—it was a childhood obsession. And I just felt that computers had some role there, and we saw the modular synthesizers that were coming out just at that time, and Wendy Carlos had produced that first Switched-On Bach recording. I remember listening to that and being blown away by the idea that this was all oscillators and filters and so on and my mind was picturing how you would do this. And looking at the picture on the record sleeve, it was just a wall of knobs and patch leads and so on. And I thought “this is a sitting duck for computerization—there must be a better way to do this”. And that’s what we set out to do.

Were you aware of what was going on at the time in computer music at, say, Stanford or elsewhere?

Not when we started. We were working in a complete vacuum, and that was probably a big plus for us. When I did find out what was happening at Stanford, I went over there to see what the state of the art was, and I met those guys, Max Mathews and the others there. They took me into a room full of computers with the spinning tapes, and the discs like washing machines and so on. And they said “watch this—it’s the result of our last ten years of research” or whatever. And the computers whirred for a couple of minutes, and lights flashed and they loaded tapes, and decks of paper cards went through and all that stuff, and out of the loudspeaker came “baaahhp”, and I said “yes...?”: “sounds like a trumpet, doesn’t it?”, and I said “yep...” and they said “well give us a couple more years and it’ll be polyphonic!”. (laughs) So...they were working on instrument modelling and actually creating the sounds from the ground up mathematically. And I thought there had to be a better way. And around that time we met up with Tony Furse, who was working on the machine that he called the Qasar, for which he’d received funding, and I think it was destined for the Canberra School of Music, that prototype.

We heard about Tony on the grapevine, I think. It was an enormous leg up for us because he’d already done a lot of design work on the microprocessor side of it which was very, very new in those days and he was way ahead of the game.

What kind of synthesis was he using at that point?

He was using Fourier synthesis, and he had the light pen system going so you could draw harmonic envelopes on the screen and synthesize a sound using harmonic envelopes. And that was making sort of interesting sounds but they were Fourier synthesis sounds, so they were fairly static sounding at that stage. But it was more interesting than what anyone else had done, because you could actually draw individual envelopes for the individual harmonics so you could get much more interesting sounds than other people had achieved.

So we licensed that design from him with a view to developing it further and commercialising it, because his prototype was a handmade device, with every IC manually interconnected, and huge, even compared to the CMI. I came back from my visit to Stanford and I was just going over in my mind thinking “somewhere in all this mix there’s got to be a shortcut—what’s the cheat’s way of getting there?”

It occurred to me that the end objective of all this was to reproduce the sound of a piano, say, and what they were trying to do elsewhere in the world was to analyse the properties of the piano, model the physical device that produced the sound and put it all back together and make a piano sound. And one day I just got the idea and thought “well, why not just cut to the chase?”—let’s take a piano sound—who gives a shit what it’s made out of—whack it into a memory and play it back, and let’s see how that works. And literally within a day of having this idea, I pulled out some integrated circuits and built an ADC and a DAC, and hooked it up to our prototype of the CMI.

The CMI was already able to play back the memory at different pitches—that was sort of fundamental to how it worked. So I thought “let’s just see what sort of a thing comes out of it”. And I built that up. The only sound source I had available was a record player, and I put on a record, dropped the stylus down and recorded my half-a-second sample, which completely filled the memory we had available. And played it back at the same pitch and it sounded very credibly like what I’d recorded. So it was like a moderately poor tape recorder playing back the same note.

So then I thought...well the interesting thing would be to see how it sounds if you play it back at different pitches, because I was well aware that it wasn’t going to transpose the sound—it was just going to play the same thing at different pitches, like putting on a tape and playing it at 15 instead of 7.5 ips. And it sounded remarkably good! Still very recognizable as a piano an octave away. And if you took a different sample and shifted it a big distance, up two or three octaves it didn’t sound anything like the original thing any more, but it sounded fantastic. Something new. And as soon as I played that piano back I got on the phone to Michael Carlos and held the phone up to the speaker and said “Listen to this, Michael” and played a few notes, and he was in his car and around to the workshop within minutes, playing this thing.
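The "tape at a different speed" behaviour can be sketched by stepping through the stored sample at a rate other than one memory location per output sample. In the Python sketch below, a step of 2^(semitones/12) raises the pitch and shortens the sound together, just as doubling tape speed from 7.5 to 15 ips gives an octave up at half the length. This is a software stand-in that uses a fractional read pointer with linear interpolation; the function name is hypothetical, and the original hardware is not claimed to have worked this way.

```python
from typing import List


def varispeed(samples: List[float], semitones: float) -> List[float]:
    """Replay `samples` faster or slower, shifting pitch and length together."""
    ratio = 2.0 ** (semitones / 12.0)  # +12 semitones -> read twice as fast
    out = []
    pos = 0.0
    last = len(samples) - 1
    while pos <= last:
        i = int(pos)
        frac = pos - i
        nxt = samples[i + 1] if i < last else samples[i]
        out.append(samples[i] * (1.0 - frac) + nxt * frac)  # linear interpolation
        pos += ratio
    return out


ramp = [0, 1, 2, 3, 4, 5, 6, 7]
octave_up = varispeed(ramp, 12)     # half as many samples: higher and shorter
octave_down = varispeed(ramp, -12)  # about twice as many: lower and longer
```

Nothing in this process changes the character of the recording to suit the new pitch, which is why a piano shifted by one octave still sounds like a piano while one shifted two or three octaves turns into something new.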

At that time the Fairlight was already a sort of prototype digital synthesizer, but the sampling capability was an idea that came quite late in the day, wasn’t it?

Yes, although we weren’t near to a commercial product at that stage, and we’d been focusing on additive synthesis. But then we used its hardware to start sampling, and within a very short period of time we realised that this was the way to go.

How did you get from that breakthrough—obviously it pricked Michael Carlos’s ears up—to actually getting it into production or at least to a prototype stage that you could demonstrate?

It wasn’t difficult at all, because we had all the infrastructure there already. We had the computing power, we had the graphic input/output system with the light pen. I’m not sure if we had floppy discs at that stage, but we had some prototypes of the first 8” floppy discs over from Memorex in America so we could actually store stuff on removable media. So all the components were there; we just had to add the ADC and after that it was relatively straightforward.

Our goal was to produce a commercially useful instrument and any academic use of it was completely incidental as far as we were concerned. If the Conservatorium wanted to play around with one, then good luck to them, but we saw that market as being extremely small compared to the general musicians’ market. The other factor of course was that it was bloody expensive! It was costing us nearly $50,000 a piece to build these things.

So it wasn’t just profiteering on your part, then—it was just how much the components cost?

That’s right. We had to make everything ourselves. Even the alphanumeric keyboard. Nowadays a five-minute drive from any population centre will get you to a computer shop where you can buy a keyboard for $10 or $15, but we had to build those things. We had to weld together an aluminium case and buy all the little switches and stick them in a circuit board and put in a microprocessor and program it. So that part of it alone would have cost us probably $300 or $400 to build.

There was absolutely nothing off the shelf. The floppy drives and so on were all specialist things that were only available if you begged the manufacturer for a pre-production prototype. Even the music keyboard. We bought the key assembly from an organ manufacturer but we had to fit our own contact assemblies there because we wanted it to be a velocity-sensitive keyboard and that wasn’t available off the shelf either. So the end result was that we had an extremely expensive device that really only a rich pop star or a recording studio could afford to buy. And for somewhere like a Conservatorium or IRCAM or somewhere to buy a $100,000 instrument was pretty unlikely. In fact the Powerhouse Museum in Sydney bought one of our production models which they still have in mint condition there. They keep it in storage and only bring it out on special occasions.

We sold them that one at cost, which would have been around $50,000 or so. Decades later the curator who arranged its purchase told me that he had an enormous struggle to convince the museum that they should buy such a thing. Particularly when they’ve got things like Yamaha pianos that are of similar value, but Yamaha give them to them! Other people beg them “please put one of these in your museum”. So the idea of going out and buying something for that sort of money was pretty outrageous at the time. But he was very pleased that he talked them into it because subsequent history tells that it was a really good choice—they’ve got a proper museum piece there now.

How did you get the CMI into the hands of pop stars, then—how did word get out?

As with pretty well everything in the Fairlight story, it was luck more than good management in that we started the business in the basement of Kim’s grandmother’s house. That was because we had enough room there we could use for free. Next door was the house where a fellow called Bruce Jackson had grown up, and he had since gone to work in the United States as a sound engineer and he’d worked for a lot of the big names over there. But at the time that we started Fairlight he was Elvis’s chief engineer. So he was very well connected with the music industry at the highest levels. Now in 1977—the year Elvis died—Bruce came home for a holiday and he was there next door and wandered in and said “what are you up to, boys?” And we showed him this thing and he was blown away and said “wow, this is years ahead of anything else that anyone’s doing elsewhere in the world—do you want some help selling it?” So when we had the first prototype completed he took me for a whistle-stop tour of all the best studios in the States and it was a very rapid word of mouth process from there.

I think the first stop was in LA—it might have even been a Stevie Wonder session, when he was recording Journey Through ‘The Secret Life of Plants’. He immediately latched on to it: “we can put nature sounds in here that will work really well with this concept.” From there we went all over the place, but the next major connection was that someone knew Larry Fast, who was working for Peter Gabriel. He was Gabriel’s synthesizer player and he was recording in New York at the time, and they said “can you come up to New York and we’ll have a look at it”. So we went up to New York and he looked at it and then he rang someone else at another studio and that’s how it went. And then I delivered the first machine to Peter Gabriel in the UK.

So he got machine No. 1, did he?

No one listens to Radio New Zealand, do they?—I’ll tell you a secret: there was more than one serial number 1! (laughs) So several people could be pleased to have got the first machine. Certainly the first machines went to Stevie Wonder and Peter Gabriel. Peter Gabriel introduced the instrument to Kate Bush. I think he also introduced me to Led Zeppelin. John Paul Jones was the guy I met, in a very nice studio somewhere in the countryside, I recall. And so it was just luck, word of mouth.

And also presumably the desire on those people’s parts to be one step ahead of the game with a new piece of gear that hardly anyone else had.

Well, it wasn’t only that it was a new piece of gear—they could do stuff that no one else could do, the sorts of sounds that were on the early Peter Gabriel and Kate Bush recordings and so on. They were the first to be able to do that.

Did it surprise you that it took off so quickly, that people were so wowed by its capabilities?

Not really. I thought it was pretty good. (laughs)

Did they use it in a way that you were expecting or did you envisage something else?

No, I didn’t have any expectations. I mean, I didn’t even know who most of these people were, let alone what they were doing, so if someone had played me a Peter Gabriel or a Stevie Wonder song I wouldn’t have connected it with anyone in particular anyhow. I was too busy for the whole of the ‘70s and the ‘80s. I didn’t sort of surface from the workshop and the soldering iron and writing code until 4am every day, so I didn’t know anything about that.

Did you then start to get feedback from the musicians saying “it’s great, but it would be even better if it could do this or that”...

Yeah, very much so. And fortunately a lot of the musicians into whose hands the first Fairlights passed were very smart people who could offer very useful suggestions. And mostly they were well-thought-out ideas and they could tell us exactly why they wanted to do it and how we might do it. Our customers weren’t the sort of beer-in-one-hand, joint-in-the-other “wow this is so cool, man—can it take me to the Moon?” sort of musicians: they were very smart people. So that was a big help, too. On the other hand, it was incredibly difficult to implement any of this stuff because we’re talking 15 years before the internet was around, so software updates involved putting a floppy disc on a courier and sending it by DHL across the world. It was expensive and slow to do updates. It wasn’t something that you could just put out on the internet and have it be there within seconds.

Similarly, feedback in the other direction was very slow: if someone wanted to demonstrate something to you, the only way was to hold the phone up to the machine and play it. Other than that, they had to put a tape in the post, and only when we received it would we find out what they were talking about if they were trying to report a bug or something. So it was a very complicated and expensive support process. And that was probably what brought the company undone more than anything: the cost of updating and supporting our customers all over the world pre-internet, with computer technology that was all very custom-built and delicate by today’s standards. It was a big task.

How did you feel about the fact that the sampling side of things was what people latched on to and that other aspects of the machine were overlooked? Presumably you ended up putting a lot more time and effort into refining the sampling capabilities rather than the synthesis side.

Yes, that’s absolutely what happened. The synthesis side of it sort of fell by the wayside, simply because there wasn’t much interest in it, and in any case what you could do with it was only a bit better than what you could do with other instruments. Whereas the sampling thing was unique, and that’s where the value in the instrument came from.

But it’s worth bearing in mind that with the combination of the sampling facility and the Page R sequencer you already had what we’d now call a digital audio workstation—albeit a rather crude one by today’s standards.

Well, that was the second thing. The first thing was the sampling: that you could just use it as an amazing keyboard instrument. But the fact that you could then produce whole records on your own in a dark room with nothing more than a Fairlight and a multitrack—that was something that we hadn’t really thought through until it started to happen. It just took on a life of its own as well and that became the second big breakthrough.

Coming back to the current machine: are you thinking of extending its methods of sound generation beyond sampling and additive synthesis into other ways of creating sound, beyond current paradigms and making it a less passive instrument?

In general, yes; in specific detail—I don't really have specific ideas on that. It’s not as if I’ve got things in the back of my mind that I want to implement. It’s more that I feel with the Crystal Core Engine—which is at the heart of the new instrument—we’ve got a platform to offer and it’s a much more powerful platform than anything that’s been available before, and I don’t think it will be long before ideas come flooding in from people using it who will have ideas of things that you can do on this FPGA processor that you wouldn’t contemplate doing on a regular computer.

It has the flexibility of software but the performance of hardware in terms of speed. So you don’t suffer the latency problems that you do with a regular sort of processor churning away and producing sound. That’s one of the main features of the processor that is used in the current range of Fairlight consoles: the input/output latency is so small that you can actually use it for live monitoring, which you can’t do on a lot of systems. I think the worst case delay through the whole system is about half a millisecond. So you can do things that you can’t imagine doing on even the fastest Pentium-style processors. Now what exactly we’ll want to do, I don’t know.

But it could be a situation where you could respond very quickly to your customers.

Well we’re in a whole different world now with the internet in that people can trade sounds easily over the internet, we can upgrade software very, very quickly and you’ve got that whole sort of user community thing that you didn’t used to have. So communication between users around the world is a lot easier now than it used to be.

What would you like to see the next generation of sound-producing hardware and software do?

I think something completely different would be good, because I don’t see that much has changed in the last 20, 30 years. There’s been very minor tinkering around the edges, but as far as I’m aware there hasn’t really been anything as dramatically different as, say, when the first analogue synthesizers were introduced. It was a milestone when we introduced sampling, but I’m not really aware that there’s been anything significant since then. It’s just been cheaper, better, faster, smaller...but more of the same. So only in the abstract can I say that it would be nice if there were something that was really dramatically different.

Do you think the difference might lie in the way one physically interacts with the hardware and software? After all, working with a mouse and keyboard is still a pretty clunky way of going about things…

Well, I guess there are two separate issues here. There’s the performance side of it and then there’s the studio/computer-generated side of it. And in both those areas really nothing has changed since the Fairlight days. The musician interface is still pretty much a music keyboard, and the computer interface is still pretty much a screen with some sort of pointing device. And there is certainly an area in both those fields where there’s room for something significantly different. I guess one of the questions there, though, is that if you did have something very new, how useful would it be? That’s where a lot of experimental music has gone down a dead end…

It has to be more useful than what you’ve already got.

Yes. Unless musical paradigms change, or musical tastes change. Music itself hasn’t changed that much in hundreds of years, it’s just subtle variations on the same principles. Certainly the fact that we’re still using the same notation and the same musical structures and so on…it’s hard to imagine that anything substantially different would have any commercial application, let’s say.

I did come across an interesting piece of software almost by accident recently called Melodyne[3], which somehow analyzes polyphonic music and breaks it down into monophonic lines which are displayed on the screen and you can manipulate the separate monophonic parts and then put them back together. So you can take a recording which maybe has a guitar chord or a chord played on the piano and change one note within that chord. I think that’s pretty spectacular. I’m not quite sure how it works, but there's probably a trick involved.

I think that’s really interesting, and I’d like to see that principle taken to the next stage where maybe you can take a recording of a whole piece of music and remap elements within it in some way, either rhythmically or harmonically, or to change the nature of one sound within the piece. I see that as the next step beyond the remixing/sampling thing that’s going on now, which is basically cut and paste and layering. But to be able to take some part of the feel of a piece of music, maybe—so you could start off with music and somehow extract that abstract notion of ‘feel’ from it and then apply different melodies or sounds to that. Those sorts of things are interesting.

I like the idea of taking some very complex music, which is polyphonic, with different instruments playing and so on, and reducing that to its elements and then manipulating those elements and putting them back together again. Which is maybe back to what they were doing at Stanford in the 70s, except that now we’ve got the capability to actually do it.

Then the question is how you get in and control something that’s potentially so complex. I mean you don’t want to have to control every particle of sound individually—you want to be controlling parameters more globally and then it’s a question of how you assign those macro controls to whatever representation of the sound that you have in front of you.

Hmmm, yes. I think a lot of this is going to come out of just mucking around with something that’s got the capability to easily reconfigure things. One thing I’d like to offer our customers down the track is the ability to easily build their own devices. So maybe in the same way that you can create some fictitious musical instrument in a 3D graphics world, and build something that is half trombone and half violin, and have it look like a real 3D object, maybe you can do that in the acoustic world as well.

There was one thing we were playing with at Fairlight but it never got anywhere. Have you heard of Manfred Clynes? In the ‘80s we were talking to him. He’d done a lot of research on what makes a particular performance sound like a performance, and he was doing some interesting research where he wired up musicians who were playing, so he’d get people to come in and play a violin piece and he’d record exactly what it was they were playing, as opposed to what was written. Then he’d analyse it to the nth degree. And he’d come up with mathematical explanations for the way they played. So for example going from one note to another, depending on what the start note and the end note are, will be a different physical process for the performer and therefore the effect on the timing is going to depend on the effect of the inflection, or the way the note’s attacked is going to depend on where they’re going and so on.

He’d reduced that down to algorithms. He played us a really interesting demonstration, where he would take a piece of music that was just recorded in what would now be called a MIDI file and then superimpose on to it different performers’ characteristics. So he could play a piano piece as if it were played by a particular violinist and demonstrate how it might sound. It was very confusing, but really interesting. And it had the quality of always sounding live, even though he’d started with something just programmed in a computer language which sounded really dead, and you’d ask him how this would sound if it were played on a violin or how would it sound if you played the same thing on a trombone or whatever. I don’t know what you’d call that dimension, but it was always interesting to us and something that we wanted to put into the Fairlight software if the company had lived long enough.

But it’s something you could potentially do now.

Yeah, though I’d be surprised if it hasn’t already been done, but you never know…all sorts of things that seem obvious haven’t been done.

[1] The 30th anniversary edition Fairlight CMI-30A made its debut at the NAMM show in January 2011. See http://fairlightinstruments.com.au/

[2] A field-programmable gate array. Vogel explains: “An FPGA is a chip which has millions and millions of logic gates that are not dedicated to any particular function. So under software control you can configure the whole chip. You might put together, say, a 36-bit adder or a 24x16 multiplier. Whatever you need for a particular job you design that in software and then that gets programmed into the hardware. And suddenly you can reconfigure this whole chip in under a second, to completely change what it does. So it can be a sampler one second and it can be an FM synthesizer the next. And of course you can mix and match so you can have bits of this and bits of that all in the one chip. It’s an entirely configurable piece of hardware.”

“It does the same job as software but dramatically more efficiently. A regular computer is very, very inefficient at doing audio processing. It can do it, but only by dint of speed. And the FPGA that we’re using is only clocked at a couple of hundred MHz as opposed to GHz. It’s running ten times slower, but depending on what it’s doing, it’s about a hundred to a thousand times more efficient at doing it than a computer which was designed to do arithmetic, initially, and which doesn’t do audio processing very well.”

[3] Software produced by the German company Celemony. See http://www.celemony.com/cms/index.php?id=products_studio